Overview

There are several methods on the market for calculating the ratio of optical modules to GPUs in compute networks, and they give different results. The main cause of these differences is that different network architectures require different numbers of optical modules. The exact number required depends primarily on the following key factors.

NIC model

Main NICs include ConnectX-6 (200Gb/s, mainly used with A100) and ConnectX-7 (400Gb/s, mainly used with H100).

Meanwhile, the next-generation ConnectX-8 800Gb/s is expected to ship in 2024.

Switch model

Main switch types include the QM9700 switch (32 OSFP ports, each 2x400Gb/s, for a total of 64 channels at 400Gb/s and an aggregate throughput of 51.2Tb/s) and the QM8700 switch (40 QSFP56 ports, 40 channels at 200Gb/s, aggregate throughput 16Tb/s).
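The aggregate throughput figures count every channel in both directions (64 x 400Gb/s is 25.6Tb/s one way, 51.2Tb/s both ways). A minimal sketch of that arithmetic follows; `aggregate_tbps` is a hypothetical helper name, not a vendor API.

```python
def aggregate_tbps(ports: int, channels_per_port: int, gbps_per_channel: int) -> float:
    """Aggregate switch throughput in Tb/s, counting both directions."""
    channels = ports * channels_per_port
    one_way_gbps = channels * gbps_per_channel
    return 2 * one_way_gbps / 1000  # x2 for bidirectional, /1000 for Gb -> Tb


# QM9700: 32 OSFP ports, each carrying 2 x 400Gb/s channels
qm9700 = aggregate_tbps(ports=32, channels_per_port=2, gbps_per_channel=400)
# QM8700: 40 QSFP56 ports, each a single 200Gb/s channel
qm8700 = aggregate_tbps(ports=40, channels_per_port=1, gbps_per_channel=200)
print(qm9700, qm8700)  # 51.2 16.0
```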

Unit count (scalable units)

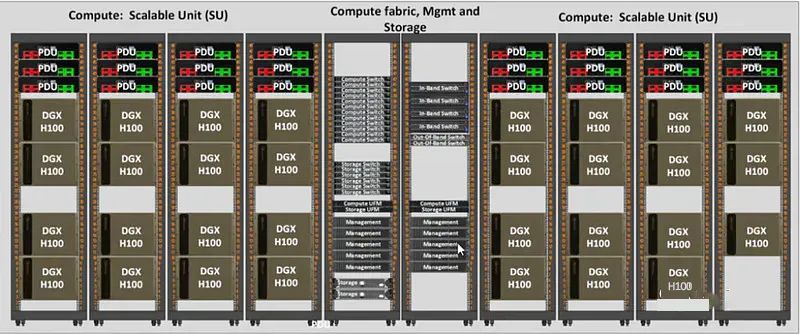

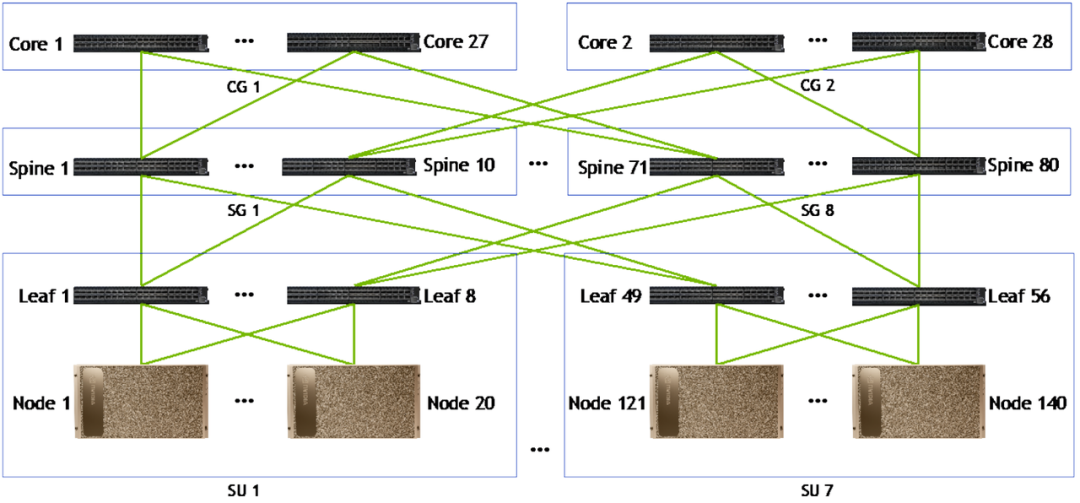

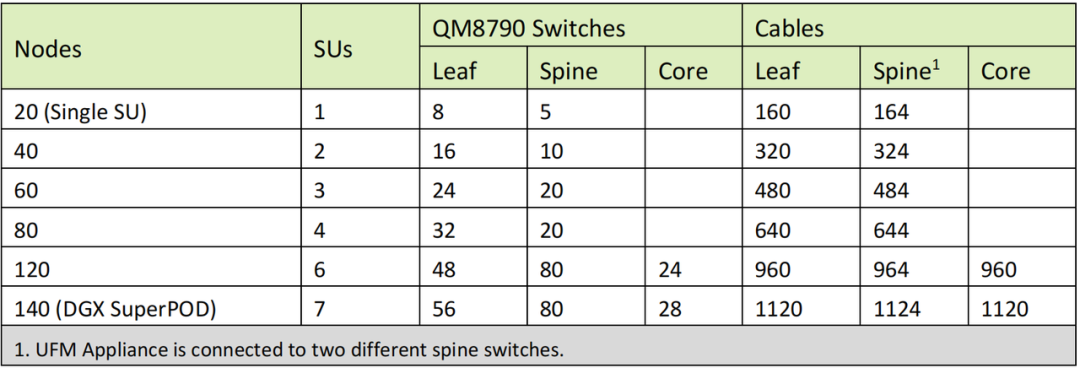

The number of units determines the configuration of the switch network. Small deployments use a two-tier design, while large deployments use a three-tier design. H100 SuperPOD: each unit consists of 32 nodes (DGX H100 servers), supporting up to 4 units in a cluster using a two-tier switching architecture. A100 SuperPOD: each unit consists of 20 nodes (DGX A100 servers), supporting up to 7 units in a cluster; if the unit count exceeds five, a three-tier switching architecture is required.
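The unit rules above can be captured in a small lookup. This is a sketch, assuming 8 GPUs per DGX server and ignoring the node slot NVIDIA reserves for management; `SuperPodSpec`, `fabric_tiers`, and `gpu_count` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuperPodSpec:
    nodes_per_su: int      # DGX servers per scalable unit (SU)
    gpus_per_node: int     # GPUs per DGX server
    max_su: int            # largest SU count described in the text
    max_two_tier_su: int   # above this, a third (core) switch tier is needed


H100_POD = SuperPodSpec(nodes_per_su=32, gpus_per_node=8, max_su=4, max_two_tier_su=4)
A100_POD = SuperPodSpec(nodes_per_su=20, gpus_per_node=8, max_su=7, max_two_tier_su=5)


def fabric_tiers(spec: SuperPodSpec, su_count: int) -> int:
    """Number of switch tiers needed for su_count scalable units."""
    if not 1 <= su_count <= spec.max_su:
        raise ValueError(f"SU count must be between 1 and {spec.max_su}")
    return 2 if su_count <= spec.max_two_tier_su else 3


def gpu_count(spec: SuperPodSpec, su_count: int) -> int:
    """Total GPUs in a cluster of su_count scalable units."""
    return su_count * spec.nodes_per_su * spec.gpus_per_node
```

For example, `fabric_tiers(A100_POD, 7)` returns 3 and `gpu_count(A100_POD, 7)` returns 1,120, the cluster size used in Case 1 below.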

Optical module demand under four network configurations

Summary of the four common configurations and their module requirements:

- A100 + ConnectX-6 + QM8700 three-tier network: ratio 1:6, all 200G modules.

- A100 + ConnectX-6 + QM9700 two-tier network: 1:0.75 of 800G modules + 1:1 of 200G modules.

- H100 + ConnectX-7 + QM9700 two-tier network: 1:1.5 of 800G modules + 1:1 of 400G modules.

- H100 + ConnectX-8 (not yet released) + QM9700 three-tier network: ratio 1:6, all 800G modules.

Market sizing example: assuming 2023 shipments of 300,000 H100 and 900,000 A100 GPUs, total demand would be approximately 3.15 million 200G modules, 300,000 400G modules, and 787,500 800G modules. Growth on this scale would significantly expand the AI data center ecosystem, driving strong demand not only for optical modules but also for the high-speed telecommunication PCBs used in optical transceivers, switch line cards, and server interconnect subsystems. Under this scenario, the AI-related optical module market is estimated at approximately $1.38 billion.
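The summary ratios can be turned into a small demand calculator. The text does not say how the 2023 shipments are split across configurations; the split below (half of the A100 GPUs on Case 1, half on Case 2, all H100 GPUs on Case 3) is an assumption, chosen because it reproduces 3.15 million 200G, 300,000 400G, and 787,500 800G modules. `RATIOS` and `module_demand` are hypothetical names.

```python
# Optical modules per GPU, by module speed, taken from the four-case summary.
RATIOS = {
    "case1_a100_qm8700_3tier": {"200G": 6.0},
    "case2_a100_qm9700_2tier": {"800G": 0.75, "200G": 1.0},
    "case3_h100_cx7_qm9700_2tier": {"800G": 1.5, "400G": 1.0},
    "case4_h100_cx8_qm9700_3tier": {"800G": 6.0},
}


def module_demand(gpus_by_case: dict[str, int]) -> dict[str, float]:
    """Sum module demand by speed for a given GPU count per configuration."""
    totals = {"200G": 0.0, "400G": 0.0, "800G": 0.0}
    for case, gpus in gpus_by_case.items():
        for speed, per_gpu in RATIOS[case].items():
            totals[speed] += gpus * per_gpu
    return totals


# Assumed (not stated in the text) split of the 2023 shipments.
demand_2023 = module_demand({
    "case1_a100_qm8700_3tier": 450_000,
    "case2_a100_qm9700_2tier": 450_000,
    "case3_h100_cx7_qm9700_2tier": 300_000,
})
print(demand_2023)
# {'200G': 3150000.0, '400G': 300000.0, '800G': 787500.0}
```

Other splits are possible; the calculator only shows that the stated totals are consistent with the per-case ratios.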

Case 1: A100 + ConnectX-6 + QM8700 three-tier network

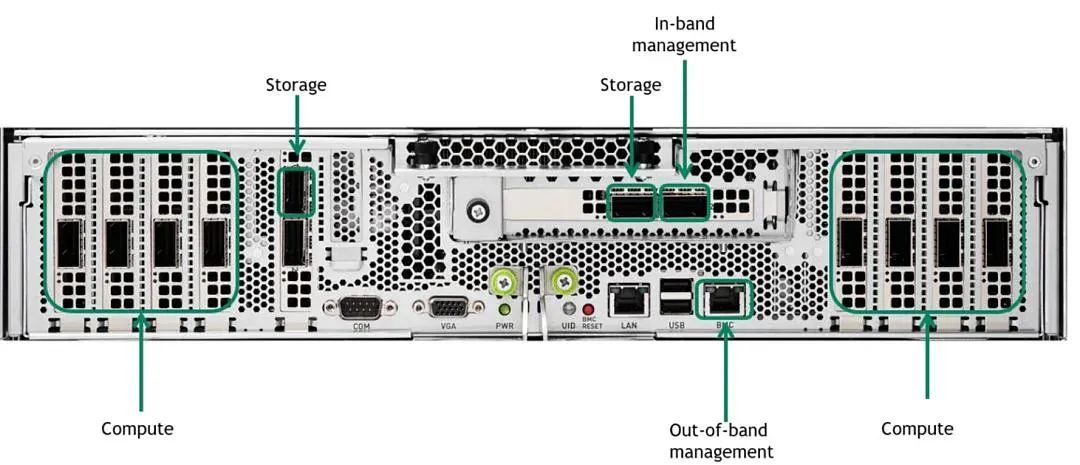

Each DGX A100 server is designed with eight compute-network interfaces: four on the left and four on the right. Most A100 shipments pair these interfaces with ConnectX-6 NICs for up to 200Gb/s per interface.

In the leaf tier, each node has eight interfaces (ports) and connects to eight leaf switches. Each 20-node group forms a scalable unit (SU). Therefore, in the first tier, a total of 8 x SU leaf switches are required, along with 8 x SU x 20 cables and 2 x 8 x SU x 20 200G optical modules.
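The leaf-tier formulas can be written as a function of the SU count. This is a sketch assuming 20 nodes per SU and eight 200G ports per server, as described above; `case1_leaf_tier` is a hypothetical helper.

```python
NODES_PER_SU = 20    # DGX A100 servers per scalable unit
PORTS_PER_NODE = 8   # eight 200G ConnectX-6 compute ports per server


def case1_leaf_tier(su: int) -> tuple[int, int, int]:
    """Return (leaf switches, cables, 200G modules) for the first tier."""
    leaf_switches = PORTS_PER_NODE * su            # each node port lands on a different leaf
    cables = PORTS_PER_NODE * su * NODES_PER_SU    # one cable per server port
    modules_200g = 2 * cables                      # one module at each end of every cable
    return leaf_switches, cables, modules_200g


print(case1_leaf_tier(1))  # (8, 160, 320)
```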

In the spine tier, because the design is non-blocking, the uplink rate equals the downlink rate. The total one-way throughput in the first tier is 200G multiplied by the number of cables. Since the second tier also uses single-cable 200G links, the number of cables in the second tier matches the first tier: 8 x SU x 20 cables and 2 x 8 x SU x 20 200G optical modules. Dividing the cable count by the number of leaf switches gives (8 x SU x 20) / (8 x SU) = 20 uplinks per leaf, so a full-size fabric needs 20 spine switches, one per leaf uplink. When there are fewer leaf switches, however, each leaf can run multiple links to the same spine, which reduces the number of spine switches (as long as no spine exceeds its 40-port limit). Therefore, for unit counts of 1, 2, 4, and 5, the required spine switch counts are 4, 10, 20, and 20, and the spine tier requires 320, 640, 1,280, and 1,600 200G optical modules respectively. The spine switch count does not grow proportionally with the unit count, but the optical module count does.
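The spine counts 4, 10, 20, 20 follow from a rule the text implies but does not spell out. The sketch below assumes (1) every QM8700 leaf and spine has 40 ports, (2) each leaf splits its 20 uplinks evenly across all spines, and (3) the smallest spine count satisfying both constraints is chosen. `min_spines` and `spine_tier_modules` are hypothetical helpers.

```python
SWITCH_PORTS = 40                     # QM8700: 40 x 200G ports (leaf and spine)
UPLINKS_PER_LEAF = SWITCH_PORTS // 2  # non-blocking: 20 ports down, 20 ports up
LEAVES_PER_SU = 8
NODES_PER_SU = 20


def min_spines(su: int) -> int:
    """Smallest spine count for a two-tier fabric of `su` scalable units."""
    leaves = LEAVES_PER_SU * su
    for spines in range(1, UPLINKS_PER_LEAF + 1):
        if UPLINKS_PER_LEAF % spines:
            continue  # assumption: a leaf's uplinks divide evenly across spines
        links_per_leaf_spine_pair = UPLINKS_PER_LEAF // spines
        if leaves * links_per_leaf_spine_pair <= SWITCH_PORTS:
            return spines  # every spine stays within its 40-port limit
    raise ValueError(f"{su} SUs exceed a two-tier fabric; a core tier is needed")


def spine_tier_modules(su: int) -> int:
    """200G modules in the spine tier: 2 x 8 x SU x 20, same as the leaf tier."""
    return 2 * LEAVES_PER_SU * su * NODES_PER_SU


for su in (1, 2, 4, 5):
    print(su, min_spines(su), spine_tier_modules(su))
```

Under these assumptions `min_spines(6)` raises `ValueError`, which agrees with the statement that more than five units require a third tier.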

When the system expands to seven units, a three-tier architecture becomes essential. Due to the non-blocking configuration, the cable count in the third tier remains the same as in the second tier. NVIDIA's SuperPOD blueprint recommends integrating the network across seven units using a three-tier architecture with core switches. Detailed diagrams show the number of switches at different tiers and the cabling required for different unit counts.

With 140 servers participating, the total number of A100 GPUs is 1,120 (140 servers x 8). This configuration requires 140 QM8790 switches across the leaf, spine, and core tiers (of which 8 x 7 = 56 are leaf switches) and 3,360 cables (1,120 per tier across three tiers). It uses 6,720 200G optical modules, two per cable. The ratio of A100 GPUs to 200G optical modules is therefore 1:6, i.e., 1,120 GPUs to 6,720 modules.
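The 7-unit totals follow from counting one cable per GPU port in every tier. A sketch with the hypothetical helper `case1_totals`, assuming 20 servers per SU and 8 GPUs per server:

```python
def case1_totals(su: int, tiers: int) -> tuple[int, int, int, float]:
    """Return (GPUs, cables, 200G modules, modules per GPU) for Case 1."""
    gpus = su * 20 * 8          # 20 DGX A100 servers per SU, 8 GPUs each
    cables = tiers * gpus       # non-blocking: one 200G cable per GPU port in every tier
    modules_200g = 2 * cables   # a module at both ends of each cable
    return gpus, cables, modules_200g, modules_200g / gpus


print(case1_totals(su=7, tiers=3))  # (1120, 3360, 6720, 6.0)
```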

Case 2: A100 + ConnectX-6 + QM9700 two-tier network

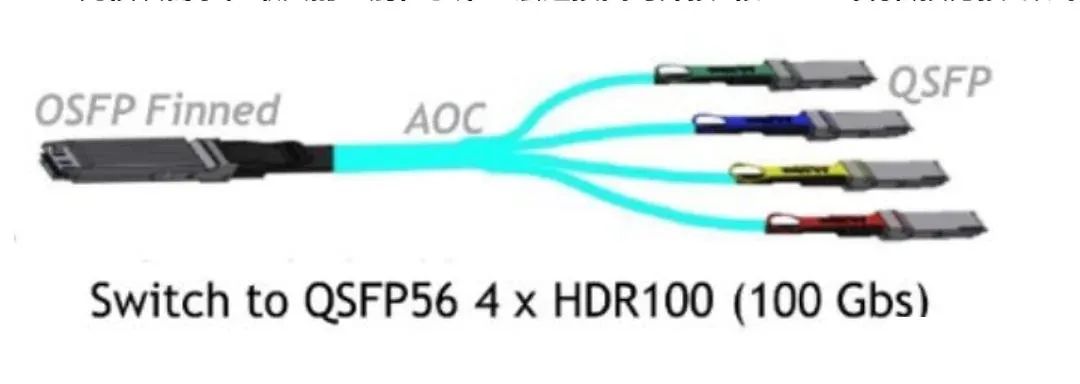

This configuration is not currently the recommended design. Nevertheless, over time more A100 GPUs may connect via QM9700 switches. This shift reduces the number of required optical modules but creates demand for 800G optical modules. The main difference is in the first-tier connections: instead of eight separate 200G switch-side links, a QSFP-to-OSFP breakout is used. Each 800G OSFP switch port carries two connections and fans out to four 200G server ports, a 1-to-4 mapping.

In the first tier: for a cluster of seven units and 140 servers, there are 140 x 8 = 1,120 interfaces. This equals 280 1-to-4 cables, so 280 800G and 1,120 200G optical modules are required. A total of 12 QM9700 switches are needed.

In the second tier: using only 800G links requires 280 x 2 = 560 800G optical modules and 9 QM9700 switches. Therefore, for 140 servers and 1,120 A100 GPUs, the total is 21 switches (12 + 9), 840 800G optical modules (280 + 560), and 1,120 200G optical modules. The A100 GPU to 800G optical module ratio is 1,120:840, simplified to 1:0.75. The A100 GPU to 200G optical module ratio is 1:1.
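A sketch of the Case 2 count, mirroring the text's steps. The second-tier figure (2 x the number of breakout cables) is taken from the text rather than derived independently; `case2_totals` is a hypothetical name.

```python
def case2_totals(servers: int) -> dict[str, float]:
    """Module counts for A100 + ConnectX-6 + QM9700 (two tiers), as counted in the text."""
    gpus = servers * 8
    ports_200g = gpus                 # one 200G ConnectX-6 port per GPU
    breakouts = ports_200g // 4       # each 800G switch port fans out to four 200G ports
    tier1_800g = breakouts            # one 800G module per breakout cable (switch side)
    tier1_200g = ports_200g           # one 200G module per server port
    tier2_800g = 2 * breakouts        # second tier: 280 x 2 in the text
    total_800g = tier1_800g + tier2_800g
    return {
        "gpus": gpus,
        "800G": total_800g,
        "200G": tier1_200g,
        "800G_per_gpu": total_800g / gpus,
        "200G_per_gpu": tier1_200g / gpus,
    }


print(case2_totals(140))
# {'gpus': 1120, '800G': 840, '200G': 1120, '800G_per_gpu': 0.75, '200G_per_gpu': 1.0}
```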

Case 3: H100 + ConnectX-7 + QM9700 two-tier network

A notable feature of the H100 architecture is that each DGX H100 server, which contains eight GPUs, is equipped with eight 400G NICs that are combined into four 800G interfaces. This consolidation creates strong demand for 800G optical modules.

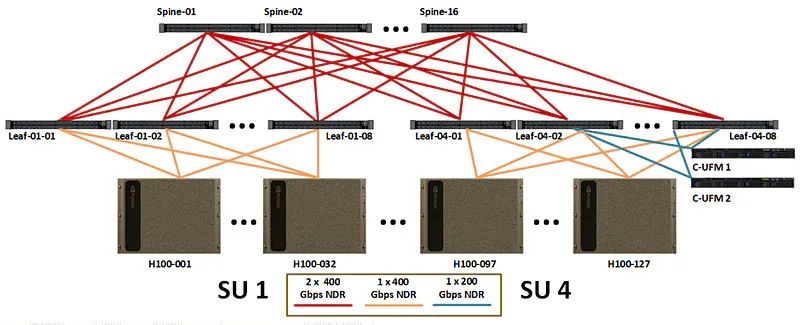

In the first tier, following NVIDIA's recommended configuration, each 800G server interface connects to one 800G optical module. That module is dual-port: it carries two (MPO) cables, each plugged into a separate leaf switch.

Thus, in the first tier, each unit consists of 32 servers, and each server connects to 2 x 4 = 8 leaf switches. In a SuperPOD with 4 units, the first tier requires 4 x 8 = 32 leaf switches. NVIDIA recommends reserving one node for management (UFM). Because this has limited impact on optical module counts, the calculations use 4 units and a total of 128 servers as a baseline. The first tier therefore requires 4 x 128 = 512 800G optical modules and 2 x 4 x 128 = 1,024 400G optical modules.
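The first-tier counts can be checked with a short sketch. As in the text, it ignores the UFM node and counts one 400G module at the leaf end of each cable; `case3_tier1` and its constants are hypothetical names.

```python
SERVERS_PER_SU = 32
OSFP_PORTS_PER_SERVER = 4   # eight 400G ConnectX-7 NICs exposed as four 800G OSFP ports
CABLES_PER_OSFP = 2         # each dual-port 800G module feeds two cables to two leaves


def case3_tier1(su: int = 4) -> tuple[int, int, int, int]:
    """Return (servers, leaf switches, 800G modules, 400G modules) for the first tier."""
    servers = SERVERS_PER_SU * su
    leaves = su * OSFP_PORTS_PER_SERVER * CABLES_PER_OSFP             # 8 leaves per SU
    modules_800g = OSFP_PORTS_PER_SERVER * servers                    # server-side modules
    modules_400g = CABLES_PER_OSFP * OSFP_PORTS_PER_SERVER * servers  # leaf side, one per cable
    return servers, leaves, modules_800g, modules_400g


print(case3_tier1())  # (128, 32, 512, 1024)
```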

In the second tier, switches connect directly using 800G optical modules. Each leaf switch carries 32 x 400G downward (one-way). To keep uplink and downlink rates equal, the uplink requires 16 x 800G (one-way). This needs 16 spine switches, for a total of 4 x 8 x 16 x 2 = 1,024 800G optical modules in the second tier. The architecture as a whole therefore requires 1,536 800G optical modules (512 + 1,024) and 1,024 400G optical modules. For a full SuperPOD with 128 servers (4 x 32), each with 8 H100 GPUs, the total H100 GPU count is 1,024. The GPU to 800G optical module ratio is 1:1.5 (1,024 GPUs : 1,536 modules), and the GPU to 400G optical module ratio is 1:1 (1,024 GPUs : 1,024 modules).
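The second-tier and overall counts can be sketched the same way. The spine count assumes each leaf sends one 800G uplink to each spine (so each spine uses 32 ports, matching the QM9700's 32 OSFP ports); `case3_totals` is a hypothetical name.

```python
def case3_totals(su: int = 4) -> dict[str, float]:
    """Two-tier H100 + ConnectX-7 + QM9700 module counts, following the text."""
    servers = 32 * su
    gpus = servers * 8
    leaves = 8 * su
    down_gbps = 32 * 400                # per leaf: 32 server cables at 400G
    uplinks = down_gbps // 800          # non-blocking: 16 x 800G uplinks per leaf
    tier1_800g = 4 * servers            # one dual-port module per server OSFP port
    tier1_400g = 2 * 4 * servers        # one module per leaf-side cable end
    tier2_800g = leaves * uplinks * 2   # a module at both ends of each uplink
    total_800g = tier1_800g + tier2_800g
    return {
        "spines": uplinks,              # assumption: one uplink from each leaf to each spine
        "tier2_800G": tier2_800g,
        "800G": total_800g,
        "400G": tier1_400g,
        "800G_per_gpu": total_800g / gpus,
        "400G_per_gpu": tier1_400g / gpus,
    }


print(case3_totals())
# {'spines': 16, 'tier2_800G': 1024, '800G': 1536, '400G': 1024, '800G_per_gpu': 1.5, '400G_per_gpu': 1.0}
```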

Case 4: H100 + ConnectX-8 (not yet released) + QM9700 three-tier network

In the hypothetical scenario where the H100 GPU NICs upgrade to 800G, the external interfaces would expand from four to eight OSFP ports. Inter-tier links would therefore use 800G optical modules. The network is otherwise the same three-tier, non-blocking design as Case 1, with 800G modules replacing the 200G modules. The GPU-to-module ratio is therefore 1:6, the same as in Case 1.
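The 1:6 ratio shared by Cases 1 and 4 can be derived directly, assuming a non-blocking three-tier fabric in which each GPU has exactly one NIC port and contributes one cable to every tier, with an optical module at both ends of each cable:

```latex
\frac{N_{\text{modules}}}{N_{\text{GPU}}}
  = \underbrace{3}_{\text{tiers}}
  \times \underbrace{1}_{\text{cable per GPU per tier}}
  \times \underbrace{2}_{\text{modules per cable}}
  = 6
```

For 1,120 GPUs this gives 6,720 modules, matching the Case 1 count; only the module speed differs between the two cases.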

Summarizing the above: if 2023 shipments were 300,000 H100 GPUs and 900,000 A100 GPUs, total demand would be 3.15 million 200G modules, 300,000 400G modules, and 787,500 800G modules. Looking to 2024 with 1.5 million H100 and 1.5 million A100, expected demand would be 750,000 200G modules, 750,000 400G modules, and 6.75 million 800G modules.

For A100 GPUs, connections are split between 200G (QM8700) and 400G (QM9700) switches, as in Cases 1 and 2. For H100 GPUs, connections use 400G (QM9700) switches with either 400G (ConnectX-7) or 800G (ConnectX-8) NICs, as in Cases 3 and 4.

Conclusion

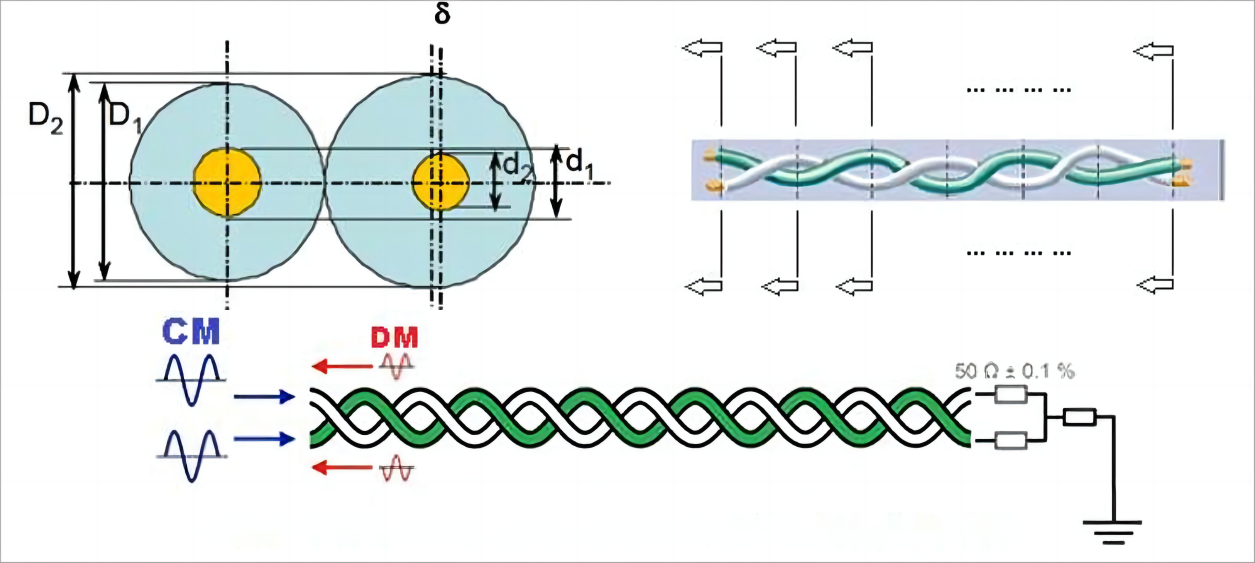

As technologies evolve, network deployments have seen the emergence of 400G multimode optical modules, along with active optical cables (AOC) and direct attach cables (DAC), enabling higher bandwidth density and improved power efficiency in data center and enterprise networks. These solutions are increasingly adopted to support short-reach and medium-reach interconnects, reflecting a broader shift toward modular, scalable, and cost-optimized high-speed connectivity architectures.

Looking ahead, AI-driven changes in optical modules are expected to further accelerate this evolution. The rapid growth of AI workloads, large-scale model training, and distributed inference is reshaping performance requirements for latency, throughput, and signal integrity. As a result, next-generation high-speed solutions will continue to evolve beyond 400G, integrating smarter modulation, enhanced thermal management, and tighter hardware-software co-design to meet the escalating demands of AI-centric network infrastructures.